Multi-Projector-Mapper (MPM): Open-Source 3D Projection Mapping Software Framework

1 Introduction

The multi-projector-mapper (MPM) is an open-source software framework for 3D projection mapping using multiple projectors. It contains a basic rendering infrastructure, and interactive tools for projector calibration. For calibration, the method given in Oliver Bimber and Ramesh Raskar’s book Spatial Augmented Reality, Appendix A, is used.

The framework is the outcome of the “Projections of Reality” cluster at smartgeometry 2013, and should be seen as a prototype that can be used for developing specialized projection mapping applications. Alternatively, the projector calibration method alone could be used just to output the OpenGL projection and modelview matrices, which can then be used by other applications. In addition, the more generic code within the framework may also serve as a starting point for those who want to dive into ‘pure’ Java / OpenGL coding (e.g. when coming from Processing).

We are currently continuing work on the code at ETH Zurich’s Future Cities Laboratory. Upcoming features include the integration of the 3D scene analysis component, which has so far been realised as a separate application. Your suggestions and feedback are welcome!

1.1 Source Code Repository @ GitHub

The framework is available as open-source (BSD licensed). Jump to GitHub to get the source code:

https://github.com/arisona/mpm

The repository contains an Eclipse project, including dependencies such as JOGL. Thus the code should run out of the box on Mac OS X, Windows and Linux.

2 Usage

The framework allows an arbitrary number of projectors - as many as your computer allows. At smartgeometry, we were using an AMD HD 7870 Eyefinity 6 with six Mini DisplayPort outputs, of which four were used for projection mapping and one as control output.

2.1 Configuration

The code allows opening an OpenGL window for every output (for projection mapped scenes, windows without decorations are used, and they can be placed accordingly at full screen on the virtual desktop):

    public MPM() {
        // Model displayed in calibration mode
        ICalibrationModel model = new SampleCalibrationModel();
        scene = new Scene();
        scene.setModel(model);

        // Control view: a decorated window
        scene.addView(new View(scene, 0, 10, 512, 512, "View 0", 0, 0.0, View.ViewType.CONTROL_VIEW));
        // Projection views: undecorated windows, one per projector output
        scene.addView(new View(scene, 530, 0, 512, 512, "View 1", 1, 0.0, View.ViewType.PROJECTION_VIEW));
        scene.addView(new View(scene, 530, 530, 512, 512, "View 2", 2, 90.0, View.ViewType.PROJECTION_VIEW));
        ...
    }

The code above opens three windows: one control view (which has window decorations), and two projection views (without decorations). The coordinates and window sizes in this example are just samples and need to be adjusted for a concrete case (i.e. depending on the virtual desktop configuration).
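Because the window coordinates depend on the virtual desktop, it can be convenient to compute them instead of hard-coding them. The following is a minimal sketch under the assumption that all outputs have the same resolution and are arranged in a single row. The class ViewLayout and its Bounds type are hypothetical helpers for illustration and are not part of MPM.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper, not part of MPM: computes window bounds on a virtual desktop. */
final class ViewLayout {

    /** Position and size of one window, in virtual desktop pixels. */
    static final class Bounds {
        final int x, y, width, height;

        Bounds(int x, int y, int width, int height) {
            this.x = x;
            this.y = y;
            this.width = width;
            this.height = height;
        }
    }

    /**
     * Places count windows of equal size in a single row, left to right,
     * starting at x = 0 and separated by gap pixels.
     */
    static List<Bounds> sideBySide(int count, int width, int height, int gap) {
        List<Bounds> result = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            result.add(new Bounds(i * (width + gap), 0, width, height));
        }
        return result;
    }

    public static void main(String[] args) {
        // Three 512 x 512 windows with an 18 pixel gap between them.
        for (Bounds b : sideBySide(3, 512, 512, 18)) {
            System.out.println(b.x + "," + b.y + " " + b.width + "x" + b.height);
        }
    }
}
```

The computed x, y, width and height values could then be passed to the View constructor in place of the sample numbers.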

2.2 Launching the Application & Calibration

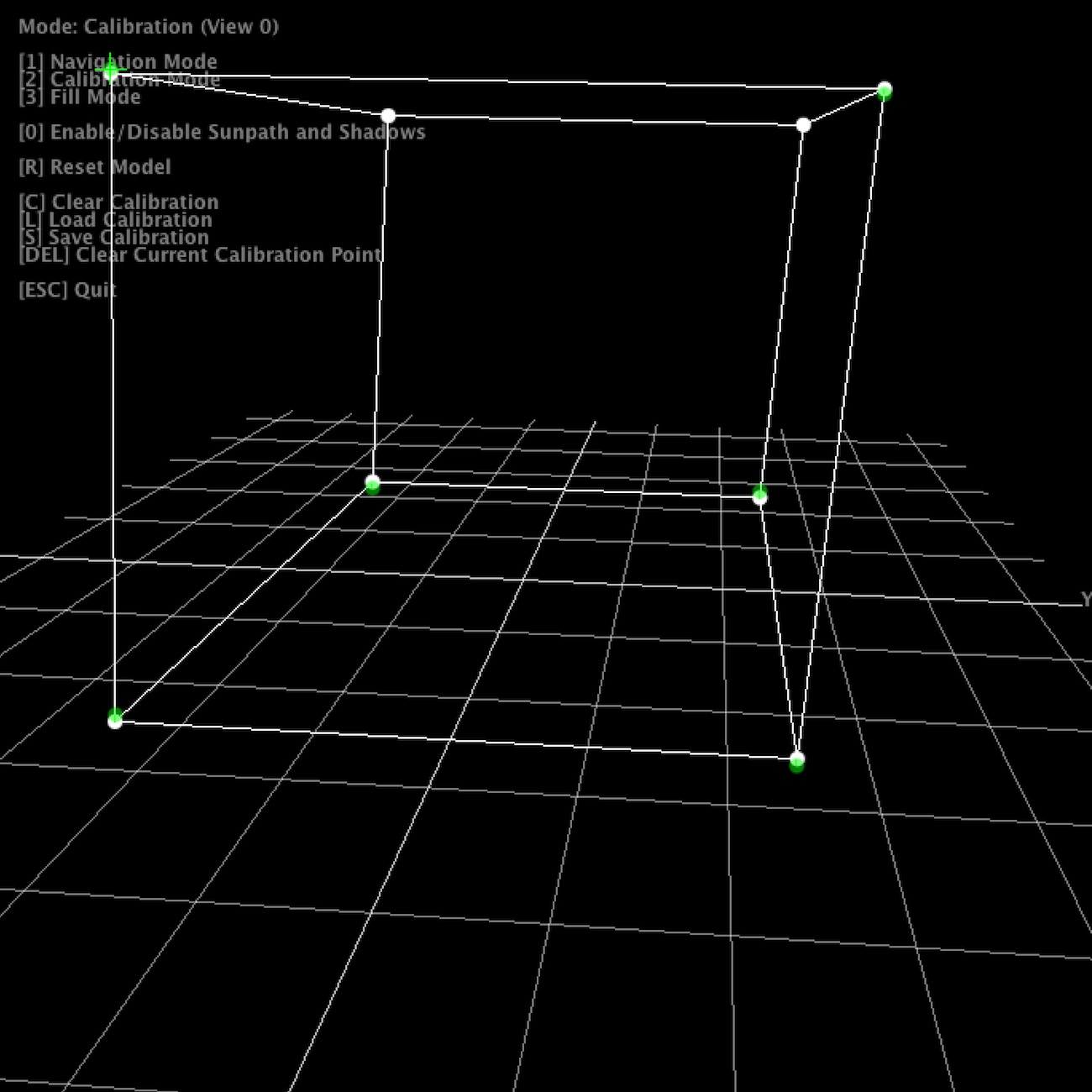

Once the application launches, all views show the default scene. The control view additionally shows the available keystrokes. Pressing “2” switches to calibration mode. The views will now show the calibration model, with calibration points. Note that in calibration mode, all projection views are blanked unless their window is activated (i.e. by clicking into the window).

For calibration, 6 circular calibration points in 3D space need to be matched to their physical counterparts. Thus, when using the default calibration model, which is a cube, a physical calibration rig corresponding to the cube needs to be used.

The individual points can now be matched by dragging them with the mouse. For fine tuning, use the cursor keys. As soon as the 6th point is selected, the scene automatically adjusts.
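The automatic adjustment after the sixth point amounts to estimating a projection matrix from six 3D-to-2D correspondences. The sketch below illustrates one common way to do this: a direct linear transform with the last matrix entry fixed to 1, solved by least squares through the normal equations. It is an illustration under these assumptions and not MPM's code; the class ProjectorDlt and its methods are hypothetical, and MPM's own implementation (which follows Bimber and Raskar and uses Commons Math3) may differ in detail.

```java
/** Hypothetical sketch, not MPM code: estimates a 3x4 projection matrix from point pairs. */
final class ProjectorDlt {

    /**
     * world[i] = {X, Y, Z}, image[i] = {u, v}; needs at least 6 non-degenerate pairs.
     * Returns P (3x4) with P[2][3] fixed to 1, so that (u, v, 1) ~ P * (X, Y, Z, 1).
     */
    static double[][] estimate(double[][] world, double[][] image) {
        int n = world.length;
        if (n < 6 || image.length != n) {
            throw new IllegalArgumentException("need at least 6 matching pairs");
        }
        // Each pair contributes two linear equations in the 11 unknown entries of P.
        double[][] a = new double[2 * n][];
        double[] b = new double[2 * n];
        for (int i = 0; i < n; i++) {
            double x = world[i][0], y = world[i][1], z = world[i][2];
            double u = image[i][0], v = image[i][1];
            a[2 * i] = new double[] {x, y, z, 1, 0, 0, 0, 0, -u * x, -u * y, -u * z};
            a[2 * i + 1] = new double[] {0, 0, 0, 0, x, y, z, 1, -v * x, -v * y, -v * z};
            b[2 * i] = u;
            b[2 * i + 1] = v;
        }
        // Augmented normal equations [A^T A | A^T b].
        double[][] m = new double[11][12];
        for (int r = 0; r < 11; r++) {
            for (int k = 0; k < 2 * n; k++) {
                for (int c = 0; c < 11; c++) m[r][c] += a[k][r] * a[k][c];
                m[r][11] += a[k][r] * b[k];
            }
        }
        double[] p = solve(m);
        return new double[][] {
            {p[0], p[1], p[2], p[3]},
            {p[4], p[5], p[6], p[7]},
            {p[8], p[9], p[10], 1.0}
        };
    }

    /** Gauss-Jordan elimination with partial pivoting on an augmented n x (n+1) matrix. */
    private static double[] solve(double[][] m) {
        int n = m.length;
        for (int col = 0; col < n; col++) {
            int pivot = col;
            for (int r = col + 1; r < n; r++) {
                if (Math.abs(m[r][col]) > Math.abs(m[pivot][col])) pivot = r;
            }
            double[] tmp = m[col];
            m[col] = m[pivot];
            m[pivot] = tmp;
            if (Math.abs(m[col][col]) < 1e-12) {
                throw new ArithmeticException("degenerate point configuration");
            }
            for (int r = 0; r < n; r++) {
                if (r == col) continue;
                double f = m[r][col] / m[col][col];
                for (int c = col; c <= n; c++) m[r][c] -= f * m[col][c];
            }
        }
        double[] x = new double[n];
        for (int i = 0; i < n; i++) x[i] = m[i][n] / m[i][i];
        return x;
    }

    /** Projects a world point {X, Y, Z} with P and returns {u, v}. */
    static double[] project(double[][] p, double[] w) {
        double[] h = new double[3];
        for (int r = 0; r < 3; r++) {
            h[r] = p[r][0] * w[0] + p[r][1] * w[1] + p[r][2] * w[2] + p[r][3];
        }
        return new double[] {h[0] / h[2], h[1] / h[2]};
    }

    public static void main(String[] args) {
        // Synthetic check: project six cube corners with a known matrix, then re-estimate it.
        double[][] truth = {{0.5, 0.1, 0.0, 0.2}, {0.0, 0.6, 0.1, -0.1}, {0.05, 0.02, 0.25, 1.0}};
        double[][] world = {{0, 0, 0}, {1, 0, 0}, {0, 1, 0}, {1, 1, 0}, {0, 0, 1}, {1, 0, 1}};
        double[][] image = new double[world.length][];
        for (int i = 0; i < world.length; i++) image[i] = project(truth, world[i]);
        System.out.println(java.util.Arrays.deepToString(estimate(world, image)));
    }
}
```

Turning such a matrix into OpenGL projection and modelview matrices requires a further decomposition step, which this sketch leaves out.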

For first-time setup, there is also a ‘fill mode’, which projects a white filled rectangle (with a crosshair in the middle) for each projector. This allows for easy rough adjustment of each projector. Hit “3” to activate fill mode.

Once calibration is complete, press “S” to save the configuration, and “1” to return to navigation / rendering mode. On the next restart, press “L” to load the previous configuration. In rendering mode, the actual model is shown. By default this is just a placeholder model, since this part of the code is application-specific. The renderer includes shadow volume rendering (press “0” to toggle shadows); however, that code is not optimised at this point.

Note that it is not necessary to use a cube as calibration rig - basically any 3D shape can be used for calibration, as long as you have a matching 3D and physical model. Simply replace the initialisation of your ICalibrationModel with an instance of your custom model.

The following YouTube video provides a short overview of the calibration and projection procedure:

3 Code Internals and Additional Features

The code is written in Java using the JOGL OpenGL bindings for rendering, and the Apache Commons Math3 Library for the matrix decomposition. Most of it is rather straightforward, as it is intentionally kept clean and modular. Rendering to multiple windows makes use of OpenGL shared contexts. Currently, we’re working on the transition towards OpenGL 3.2 and will replace the fixed-function pipeline code.

In addition, the code contains a simple geometry server, which listens via UDP or UDP/OSC for lists of coloured triangles that are then fed into the renderer. Using this mechanism, at smartgeometry we built a system consisting of multiple machines doing 3D scanning, geometry analysis and rendering, sending geometry data between them over the network. Note that this is prototype code and will be replaced with a more systematic approach in the future.
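To make the idea of receiving coloured triangles over plain UDP more concrete, here is a minimal sketch. The wire format is an assumption made for this example (big-endian floats, 13 per triangle: three vertices followed by one RGBA colour) and is not MPM's actual protocol; the class TriangleReceiver and its methods are likewise hypothetical. OSC framing is not covered.

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical sketch, not MPM's server: receives coloured triangles over UDP. */
final class TriangleReceiver {
    // Assumed wire format: big-endian floats, 13 per triangle:
    // 3 vertices (x, y, z each) followed by one RGBA colour.
    static final int FLOATS_PER_TRIANGLE = 13;

    /** Decodes a payload into triangles; trailing bytes of an incomplete triangle are ignored. */
    static List<float[]> decode(byte[] data, int length) {
        ByteBuffer buf = ByteBuffer.wrap(data, 0, length); // big-endian by default
        List<float[]> triangles = new ArrayList<>();
        while (buf.remaining() >= FLOATS_PER_TRIANGLE * Float.BYTES) {
            float[] t = new float[FLOATS_PER_TRIANGLE];
            for (int i = 0; i < t.length; i++) t[i] = buf.getFloat();
            triangles.add(t);
        }
        return triangles;
    }

    /** Encodes triangles in the same assumed format (useful for a sender or for tests). */
    static byte[] encode(List<float[]> triangles) {
        ByteBuffer buf = ByteBuffer.allocate(triangles.size() * FLOATS_PER_TRIANGLE * Float.BYTES);
        for (float[] t : triangles) {
            if (t.length != FLOATS_PER_TRIANGLE) {
                throw new IllegalArgumentException("expected 13 floats per triangle");
            }
            for (float f : t) buf.putFloat(f);
        }
        return buf.array();
    }

    /** Blocks until one datagram arrives on the given port and returns its triangles. */
    static List<float[]> receiveOnce(int port) throws IOException {
        try (DatagramSocket socket = new DatagramSocket(port)) {
            byte[] buffer = new byte[65507]; // maximum UDP payload over IPv4
            DatagramPacket packet = new DatagramPacket(buffer, buffer.length);
            socket.receive(packet);
            return decode(packet.getData(), packet.getLength());
        }
    }

    public static void main(String[] args) {
        // Round trip without any network access: one red triangle in the z = 0 plane.
        float[] tri = {0, 0, 0, 1, 0, 0, 0, 1, 0, 1f, 0f, 0f, 1f};
        byte[] payload = encode(Collections.singletonList(tri));
        System.out.println(decode(payload, payload.length).size() + " triangle(s) decoded");
    }
}
```

A renderer would typically call receiveOnce (or a looping variant) on a background thread and hand the decoded triangles to the drawing code.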

4 Credits & Further Information

Concept & projection setup: Eva Friedrich & Stefan Arisona. Partially also based on earlier discussions and work of Christian Schneider & Stefan Arisona.

Code: MPM was written by Stefan Arisona, with contributions by Eva Friedrich (early prototyping, and shadow volumes) and Simon Schubiger (OSC).

Support: This software was developed in part at ETH Zurich’s Future Cities Laboratory in Singapore.

A general overview of the work at smartgeometry'13 is available at the “Projections of Reality” page.

Visualizing Interchange Patterns in Massive Movement Data (EuroVis 2013)

Authors: Wei Zeng, Chi-Wing Fu, Stefan Arisona, Huamin Qu

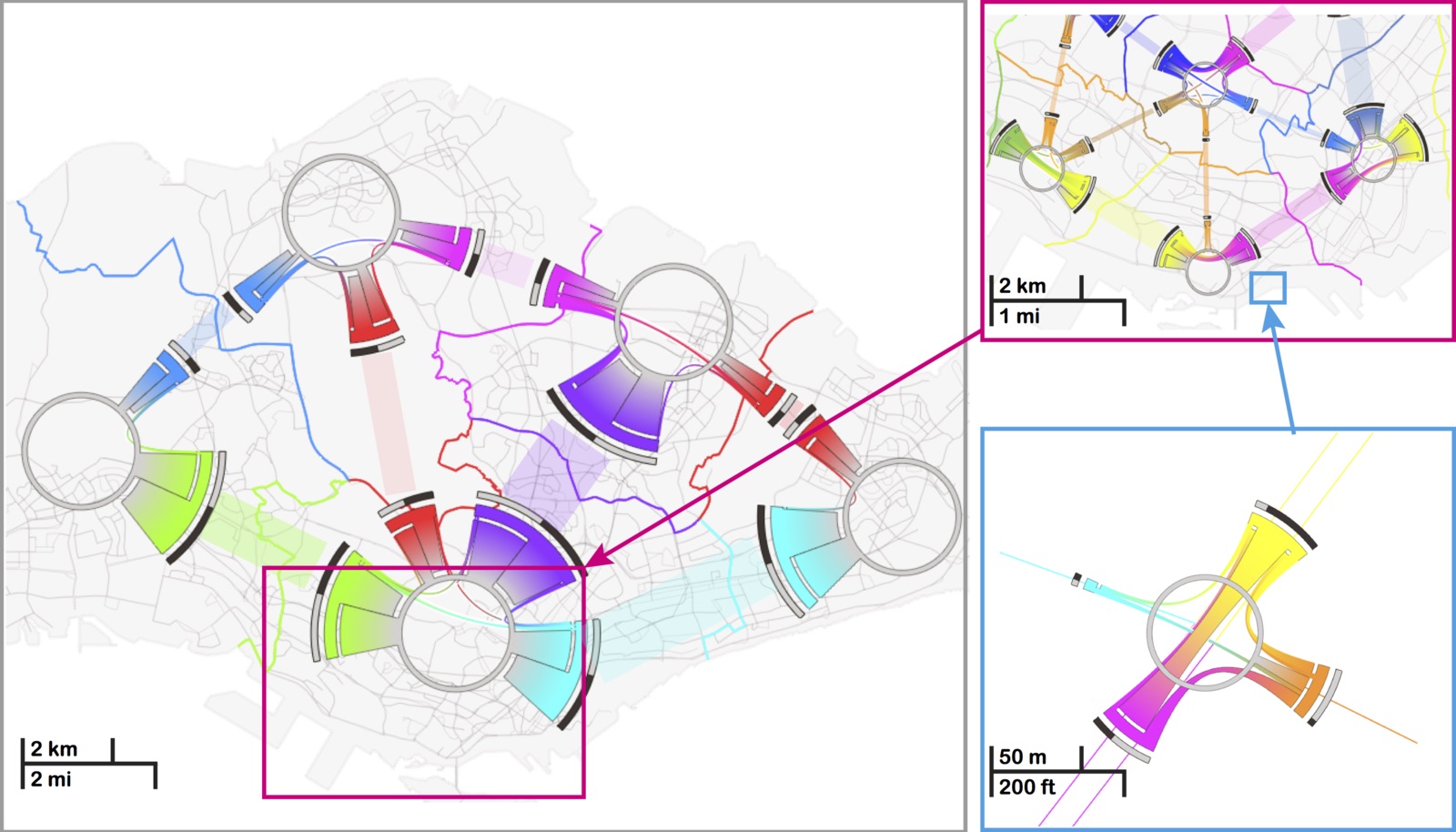

Abstract: Massive amount of movement data, such as daily trips made by millions of passengers in a city, are widely available nowadays. They are a highly valuable means not only for unveiling human mobility patterns, but also for assisting transportation planning, in particular for metropolises around the world. In this paper, we focus on a novel aspect of visualizing and analyzing massive movement data, i.e., the interchange pattern, aiming at revealing passenger redistribution in a traffic network. We first formulate a new model of circos figure, namely the interchange circos diagram, to present interchange patterns at a junction node in a bundled fashion, and optimize the color assignments to respect the connections within and between junction nodes. Based on this, we develop a family of visual analysis techniques to help users interactively study interchange patterns in a spatiotemporal manner: 1) multi-spatial scales: from network junctions such as train stations to people flow across and between larger spatial areas; and 2) temporal changes of patterns from different times of the day. Our techniques have been applied to real movement data consisting of hundred thousands of trips, and we present also two case studies on how transportation experts worked with our interface.

https://www.youtube.com/watch?v=_QWnA1k2ZrU

Title: Visualizing Interchange Patterns in Massive Movement Data

Authors: Wei Zeng, Chi-Wing Fu, Stefan Arisona, Huamin Qu

Journal: Computer Graphics Forum

Publisher: Wiley

Year: 2013

Volume: 32(3)

Pages: 271-280

DOI: 10.1111/cgf.12114

Link: https://onlinelibrary.wiley.com/doi/10.1111/cgf.12114/abstract

Projections of Reality (smartgeometry'13)

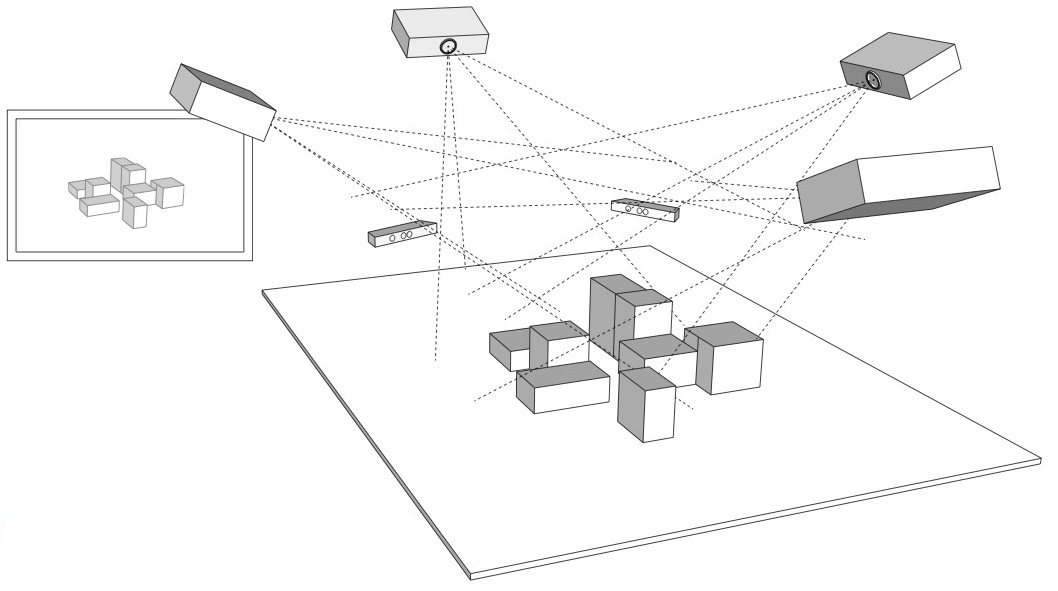

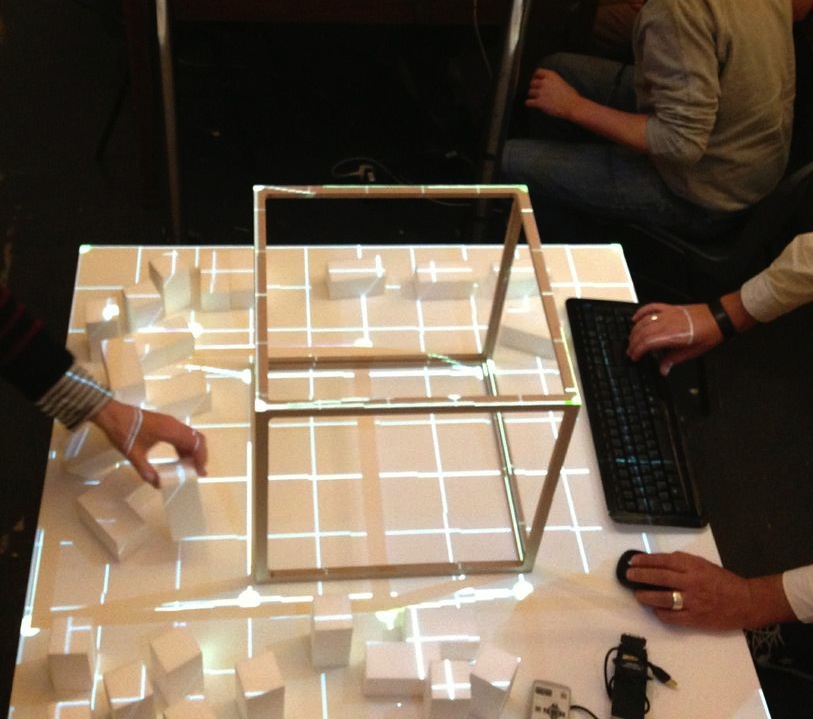

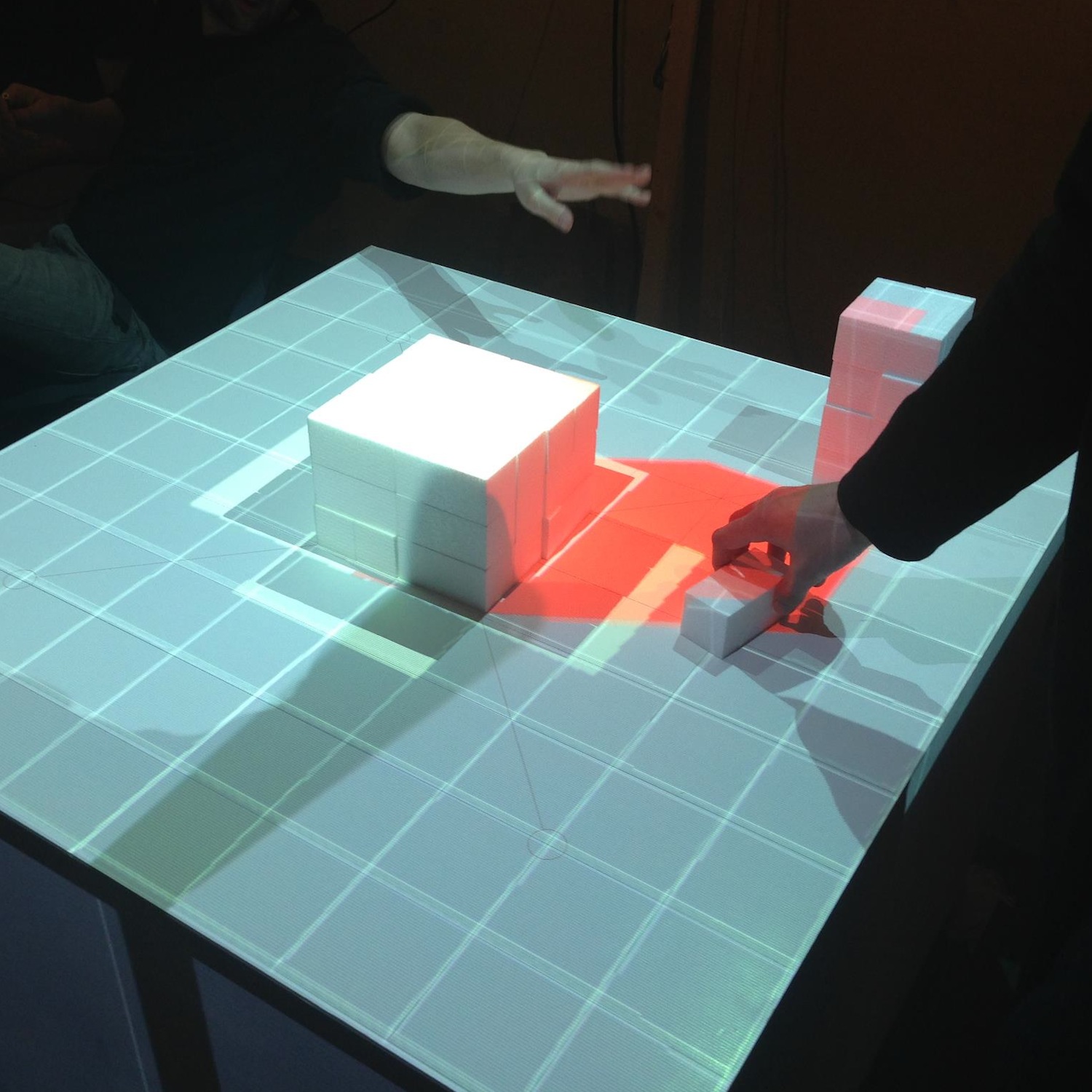

Projections of Reality engages in augmenting design processes involving physical models with real time spatial analysis. The work was initiated at smartgeometry'13 at UCL London in April 2013 and is currently continued at ETH Zurich’s Value Lab Asia in Singapore.

The work explores techniques of real time spatial analysis of architectural and urban design models: how physical models can be augmented with real-time 3D capture and analysis, enabling the architect to interact with the physical model whilst obtaining feedback from a computational analysis. We investigate possible workflows that close the cycle of designing and model making, analysing the design and feeding results back into the design cycle by projecting them back onto the physical model.

On a technical level, we are addressing the challenge of dealing with complex, unstructured data from real time scanning, e.g. a point cloud, which needs processing before it can be used in urban analysis. We will investigate strategies to extract information about the urban form targeted at design processes, using real time scanning devices (Microsoft Kinect, handheld laser scanners, etc.), open source 3D reconstruction software (reconstructMeQT) and post-processing of the input through parametric modelling (e.g. Processing, Rhino scripting, Generative Components).

The goal of the workshop at smartgeometry'13 was to create a working prototype of a physical urban model that is augmented with real-time analysis. We worked with the cluster participants to formulate a design concept of the prototype and to break down the challenge into individual modules, e.g. analysing and cleaning the geometry, algorithms for real time analysis, projecting imagery onto the physical model.

Cluster Champions: Stefan Arisona, Eva Friedrich, Bruno Moser, Dominik Nüssen, Lukas Treyer

Cluster Participants: Piotr Baszynski, Moritz Cramer, Peter Ferguson, Rich Maddock, Teruyuki Nomura, Jessica In, Graham Shawcross, Reeti Sompura, Tsung-Hsien Wang

Year: 2013

Book: Digital Urban Modeling and Simulation

Edited by Stefan Arisona, Gideon Aschwanden, Jan Halatsch, and Peter Wonka, 2012

About this Book

This book is thematically positioned at the intersections of Urban Design, Architecture, Civil Engineering and Computer Science, and it has the goal to provide specialists coming from respective fields a multi-angle overview of state-of-the-art work currently being carried out. It addresses both newcomers who wish to obtain more knowledge about this growing area of interest, as well as established researchers and practitioners who want to keep up to date. In terms of organization, the volume starts out with chapters looking at the domain at a wide-angle and then moves focus towards technical viewpoints and approaches. (Excerpt from preface by Stefan Arisona).

Contents

Part I: Introduction

A Planning Environment for the Design of Future Cities - Gerhard Schmitt

Calculating Cities - Bharat Dave

The City as a Socio-technical System: A Spatial Reformulation in the Light of the Levels Problem and the Parallel Problem - Bill Hillier

Technology-Augmented Changes in the Design and Delivery of the Built Environment - Martin Riese

Part II: Parametric Models and Information Modeling

City Induction: A Model for Formulating, Generating, and Evaluating Urban Designs - José P. Duarte, José N. Beirão, Nuno Montenegro, and Jorge Gil

Sortal Grammars for Urban Design: A Sortal Approach to Urban Data Modeling and Generation - Rudi Stouffs, José N. Beirão, and José P. Duarte

Sort Machines - Thomas Grasl and Athanassios Economou

Modeling Water Use for Sustainable Urban Design - Ramesh Krishnamurti, Tajin Biswas, and Tsung-Hsien Wang

Part III: Behavior Modeling and Simulation

Simulation Heuristics for Urban Design - Christian Derix, Åsmund Gamlesæter, Pablo Miranda, Lucy Helme, and Karl Kropf

Running Urban Microsimulations Consistently with Real-World Data - Gunnar Flötteröd and Michel Bierlaire

Urban Energy Flow Modelling: A Data-Aware Approach - Diane Perez and Darren Robinson

Interactive Large-Scale Crowd Simulation - Dinesh Manocha and Ming C. Lin

An Information Theoretical Approach to Crowd Simulation - Cagatay Turkay, Emre Koc, and Selim Balcisoy

Integrating Urban Simulation and Visualization - Daniel G. Aliaga

Part IV: Visualization, Collaboration and Interaction

Visualization and Decision Support Tools in Urban Planning - Antje Kunze, Remo Burkhard, Serge Gebhardt, and Bige Tuncer

Spatiotemporal Visualisation: A Survey and Outlook - Chen Zhong, Tao Wang, Wei Zeng, and Stefan Arisona

Multi-touch Wall Displays for Informational and Interactive Collaborative Space - Ian Vince McLoughlin, Li Ming Ang, and Wooi Boon Goh

Testing Guide Signs’ Visibility for Pedestrians in Motion by an Immersive Visual Simulation System - Ryuzo Ohno and Yohei Wada

Publication Information

Publisher: Springer, Berlin Heidelberg

Series: Communications in Computer and Information Science, Vol. 242

Editors: Arisona, S.; Aschwanden, G.; Halatsch, J.; Wonka, P.

Year: 2012

ISBN: 978-3-642-29757-1

Link: https://www.springeronline.com/978-3-642-29757-1

ETH Zurich's Singapore-ETH Centre and the Future Cities Laboratory

The Singapore-ETH Centre for Global Environmental Sustainability (SEC) in Singapore was established as a collaboration between the National Research Foundation of Singapore and ETH Zurich in 2010. It is an institution that frames a number of research programmes, the first of which is the Future Cities Laboratory (FCL). The SEC strengthens the capacity of Singapore and Switzerland to research, understand and actively respond to the challenges of global environmental sustainability. It is motivated by an aspiration to realise the highest potentials for present and future societies. SEC serves as an intellectual hub for research, scholarship, entrepreneurship, postgraduate and postdoctoral training. It actively collaborates with local universities and research institutes and engages researchers with industry to facilitate technology transfer for the benefit of the public.

https://www.futurecities.ethz.ch/

I have been involved in Future Cities Laboratory since my return from UCSB in October 2008, and was the second PI to move to Singapore in October 2010, at that time located at temporary offices at the NUS School of Design and Environment. Main tasks included general ramp-up of the centre, establishing technical infrastructure, hiring and supervision of PhD students. In January 2012, SEC moved into its permanent offices at the Singapore National Research Foundation (NRF) Campus for Research Excellence and Technological Enterprise (CREATE). I was responsible for the design and implementation of the Value Lab Asia, which was built in only three months and has been in operation since March 2012.

The Simulation Platform Research Module (“Module IX”): Service and Research for Future Planning Environments

Informing design and decision-making processes with new techniques and approaches to data acquisition, information visualisation and simulation for urban sustainability.

In science, simulations have assumed a critical role in mediating between theory and practical experiment. In architecture, simulations increasingly function in a similar way to help integrate the design, construction, and lifecycle management of buildings. And in urban planning, simulations have become an indispensable method for generating and analysing design and planning scenarios. The growing importance of simulation for these fields has been stimulated by a rapid growth in the availability of urban-related data. Despite this, most current simulations are capable of capturing and activating only a small fraction of the available data. Addressing this gap is a matter both of providing sufficient computing power to process the vast bodies of data and of accessing the data itself, which is often held in hard-to-access databases. Contemplating advanced urban planning techniques that activate live and dynamic data shows that existing tools, such as GIS, are ill-equipped to exploit the analytical and communicative potential of this growing volume of urban data.

The Simulation Platform examines how to effectively deal with the growing volume of urban-related data. It investigates new techniques and instruments for the acquisition, organisation, retrieval, interaction, and visualisation of such data. It will propose techniques for designers, decision-makers and stakeholders to access necessary data about the city in innovative and dynamic ways. It does so in two ways. First, it supports other research modules in the Future Cities Laboratory by supplying services such as data acquisition methods and visualisation facilities. Second, building on these services, it will conduct original research on advanced and dynamic modelling, visualisation and simulation techniques that aim to better understand and intervene in the complex processes that shape contemporary cities.

Module Leader & PI: Prof Dr Gerhard Schmitt

Module Coordinator & PI: Assoc Prof (Adj) Dr Stefan Arisona

PIs: Prof Dr Armin Grün, Prof Dr Ludger Hovestadt, Prof Dr Ian Smith

Affiliated Faculty: Assoc Prof Dr Tat Jen Cham (NTU), Assoc Prof Dr Chandra Sekhar (NUS), Assoc Prof Dr Ian McLoughlin (NTU), Asst Prof Dr Philip Chi-Wing Fu, Asst Prof Dr Benny Raphael (NUS), Prof Dr Luc Van Gool (ETH Zurich), Asst Prof Dr Jianxin Wu (NTU)

PostDocs: Dr Matthias Berger, Dr Xianfeng Huang, Dr Tao Wang

PhD Students: Gideon Aschwanden, Dengxin Dai, Eva Friedrich, Vahid Moosavi, Maria Papadopoulou, Rongjun Qin, Dongyoun Shin, Sing Kuang Tan, Didier Vernay, Wei Zeng, Chen Zhong

IT Engineers: Daniel Sin, Chan Lwin