Tag: Research


The Allosphere Research Facility

During my stay at UCSB, I worked on the AlloSphere project, mostly experimenting with projection warping in a full-dome environment and with bringing in content, especially through the Soundium platform.

The AlloSphere is a large, immersive, multimedia and multimodal instrument for scientific and artistic exploration being built at UC Santa Barbara. Physically, the AlloSphere is a three-story cubic space housing a large perforated metal sphere that serves as a display surface. A bridge cuts through the center of the sphere from the second floor and comfortably holds up to 25 participants. The first-generation AlloSphere instrumentation design includes 14 high-resolution stereo video projectors to light up the complete spherical display surface, 256 speakers distributed outside the surface to provide high-quality spatial sound, a suite of sensors and interaction devices to enable rich user interaction with the data and simulations, and the computing infrastructure to enable the high-volume computations necessary to provide a rich visual, aural, and interactive experience for the user. When fully equipped, the AlloSphere will be one of the largest scientific instruments in the world; it will also serve as an ongoing research testbed for several important areas of computing, such as scientific visualization, numerical simulations, large-scale sensor networks, high-performance computing, data mining, knowledge discovery, multimedia systems, and human-computer interaction. It will be a unique immersive exploration environment, with a full surrounding sphere of high-quality stereo video and spatial sound, and with user tracking for rich interaction. It will support interaction and exploration by a single user, small groups, or small classrooms.

The AlloSphere differs from conventional virtual reality environments, such as a CAVE or a hemispherical immersive theater, through its seamless surround-view capabilities and its focus on multiple sensory modalities and interaction. It enables much higher levels of immersion and user/researcher participation than existing immersive environments. The AlloSphere research landscape comprises two general directions: (1) computing research, which includes audio and visual multimedia visualization, human-computer interaction, and computer systems research focused largely on the AlloSphere itself, i.e., pushing the state of the art in these areas to create the most advanced and effective immersive visualization environment possible; and (2) applications research, which applies AlloSphere technologies to scientific and engineering problems to produce domain-specific applications for analysis and exploration in areas such as nanotechnology, biochemistry, quantum computing, brain imaging, geoscience, and large-scale design. These two types of research activities are different yet tightly coupled, feeding back into one another: computing research produces and improves the enabling technologies for applications research, which in turn drives, guides, and re-informs computing research.

https://www.allosphere.ucsb.edu/

Location: California NanoSystems Institute, University of California, Santa Barbara

Period: 2007 - 2008

SQEAK - Real-time Multiuser Interaction Using Cellphones

This research project explored one approach to providing mobile phone users with a simple, low-cost, real-time user interface that allows them to control highly interactive public-space applications involving a single user or a large number of simultaneous users.

To sense mobile phone users' hand gestures accurately and in real time, the method uses miniature accelerometers that send orientation signals over the network's audio channel to a central computer for signal processing and application delivery. This keeps delay to a minimum, minimizes connection-protocol incompatibilities, and minimizes dependence on mobile phone type or version. Without the need for mass user compliance, large numbers of users could begin to control public-space cultural and entertainment applications using simple gesture movements.
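The page does not say how the orientation signal is modulated onto the audio channel. As a minimal sketch, assuming a simple scheme in which each tilt reading is sent as one pure tone whose frequency encodes the angle, the following Python shows a phone-side encoder and a server-side decoder based on the Goertzel algorithm. The sample rate, tone range, tone length, and all function names are illustrative assumptions, not the SQEAK design.

```python
import math

SAMPLE_RATE = 8000            # Hz; narrowband telephone audio (assumption)
F_MIN, F_MAX = 500.0, 2500.0  # tone range inside the 300-3400 Hz voice band (assumption)
TONE_SAMPLES = 800            # 100 ms tone per reading (assumption)


def angle_to_freq(angle_deg: float) -> float:
    """Map a tilt angle in [-90, 90] degrees linearly onto [F_MIN, F_MAX]."""
    clamped = max(-90.0, min(90.0, angle_deg))
    return F_MIN + (clamped + 90.0) / 180.0 * (F_MAX - F_MIN)


def encode_tilt(angle_deg: float) -> list[float]:
    """Phone side (hypothetical): render one tilt reading as a pure sine tone."""
    f = angle_to_freq(angle_deg)
    return [math.sin(2 * math.pi * f * n / SAMPLE_RATE) for n in range(TONE_SAMPLES)]


def goertzel_power(samples: list[float], freq: float) -> float:
    """Signal power at a single frequency, computed with the Goertzel recurrence."""
    coeff = 2 * math.cos(2 * math.pi * freq / SAMPLE_RATE)
    s1 = s2 = 0.0
    for x in samples:
        s1, s2 = x + coeff * s1 - s2, s1
    return s1 * s1 + s2 * s2 - coeff * s1 * s2


def decode_tilt(samples: list[float]) -> int:
    """Server side (hypothetical): return the whole-degree angle whose tone is strongest."""
    return max(range(-90, 91), key=lambda a: goertzel_power(samples, angle_to_freq(a)))
```

Decoding tests each whole-degree candidate and keeps the strongest; with 100 ms tones the candidate frequencies (about 11 Hz apart) are well separated. A real phone channel adds noise, codec distortion, and timing recovery, which this sketch ignores.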

D. Majoe, S. Schubiger-Banz, A. Clay, and S. Arisona. 2007. SQEAK: A Mobile Multi Platform Phone and Networks Gesture Sensor. In: Proceedings of the Second International Conference on Pervasive Computing and Applications (ICPCA07). Birmingham, UK, July 26 - 27.

Research: Project carried out at ETH Zurich

Location: ETH Zurich, Switzerland

Timeframe: 2006 - 2007

Realisation: Stefan Arisona, Simon Schubiger, Dennis Majoe, Art Clay

Collaborator: Swisscom Innovations

The Digital Marionette

The interactive installation Digital Marionette impressively shows the audience the look and feel of a puppet in the multimedia era: the nicely dressed wooden marionette is replaced by a Lara Croft-like character, and the traditional strings attached to puppet control handles turn into a network of computer cables. The installation was exhibited at the Ars Electronica Center in Linz from 2006 to 2008.

The installation consists of a projection of a digital face that can be controlled by the visitors. The puppet can be made to talk via speech input, and the classical puppet controls serve as controllers for head direction and facial emotions, such as joy, anger, or sadness. The whole artistic concept was designed and realised in an interdisciplinary manner, incorporating art-historical facts about marionettes, the architectural space, interaction design, and state-of-the-art research results from computer graphics and speech recognition.

Concept

The translation from old to new, from analogue to digital, takes place via the most popular computer input device: the mouse. The puppet control handles are attached to sliding strips of mousepad, and eight computer mice track the movements of the individual strings. This approach is efficient, low-cost, and easy for non-expert visitors to understand. Speech input is realised via speech recognition, where the recognised phonemes are mapped to a set of facial expressions and visemes.
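The page gives no details of how mouse movement and recognised phonemes drive the face. A minimal sketch, assuming each string's mouse reports relative vertical counts that are integrated into a normalised string position, and assuming a small hand-made phoneme-to-viseme table, could look like this. The table entries, the head-yaw rule, and all names are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical phoneme -> viseme table; the installation's actual
# Swiss-German mapping is not documented on this page.
PHONEME_TO_VISEME = {
    "p": "closed_lips", "b": "closed_lips", "m": "closed_lips",
    "f": "lip_teeth", "v": "lip_teeth",
    "a": "open",
    "o": "rounded", "u": "rounded",
    "e": "spread", "i": "spread",
}


@dataclass
class StringTracker:
    """Integrates one mouse's relative vertical counts into a string position in [0, 1]."""
    counts_per_travel: float = 500.0  # mouse counts for full string travel (assumption)
    position: float = 0.5             # start with the string half pulled (assumption)

    def update(self, dy_counts: int) -> float:
        self.position += dy_counts / self.counts_per_travel
        self.position = min(1.0, max(0.0, self.position))  # the sliding strip has finite length
        return self.position


def head_yaw(left: StringTracker, right: StringTracker, max_deg: float = 45.0) -> float:
    """Assumed mapping: the difference between two side strings turns the head."""
    return (right.position - left.position) * max_deg


def visemes(phonemes: list[str]) -> list[str]:
    """Map recognised phonemes to visemes; unknown phonemes fall back to 'neutral'."""
    return [PHONEME_TO_VISEME.get(p, "neutral") for p in phonemes]
```

In such a setup, eight trackers (one per mouse) would feed the head-direction and emotion controls. Mouse deltas are integrated and clamped because a mouse reports relative movement, not an absolute position.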

Exhibition at Museum Bellerive, Zurich, Switzerland, 2004

The first exhibition of the Marionette was realised in 2004 by the Corebounce Art Collective in cooperation with Christian Iten (interface realisation), Swisscom Innovations (Swiss-German voice recognition), ETH Zürich (real-time face animation), and Eva Afuhs and Sergio Cavero (curators, Museum Bellerive, Zürich).

Exhibition at Ars Electronica Center, Linz, Austria, 2006 - 2008

An augmented permanent version of the installation was presented in the entrance hall of the world-famous Ars Electronica Center in Linz. It was realised by the Corebounce Art Collective with technical support from Gerhard Grafinger of the Ars Electronica Center. Furthermore, we thank Ellen Fethke, Gerold Hofstadler, and Nicoletta Blacher of Ars Electronica, and Jürg Gutknecht and Luc Van Gool of ETH Zürich.

Bellerive Installation Video

3D Concept Video

Additional Information

Exhibition: Museum Bellerive

Location: Zurich, Switzerland

Period: Jun 11 - Sep 12, 2004

Exhibition: Ars Electronica Center

Location: Linz, Austria

Period: Sep 2006 - Sep 2008

Concept and realisation: Corebounce Art Collective (Pascal Mueller, Stefan Arisona, Simon Schubiger, Matthias Specht)

In 15 Minutes Everybody Will Be Famous

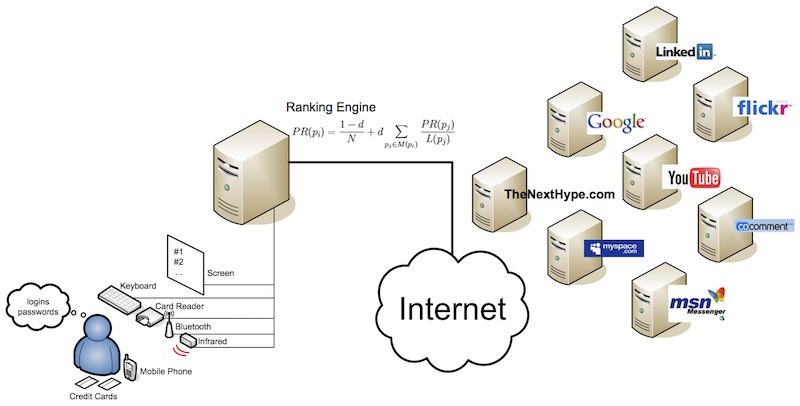

I recently (2012) came across this submission again, and was surprised, and also a bit depressed, that we never fully realised this piece at the time. This was before Facebook & co. took off; see the “next hype” box in the picture. Anyway, the concept is still here, and I believe the assertion is still valid.

We all pursue a multitude of lives in the electronic realm: a kind of schizophrenia that splinters our identity across communities, blogs, and portals. In every on-line community we cultivate our alter ego with a biography, network, reputation, and fame. The writer Joseph Campbell once said we were all potential heroes. Now, with the help of a webcam or a blog, we are all potential celebrities. When he got tired of his original quote, Andy Warhol came up with an adapted variant of his “15 minutes of fame”, which gave hope to the insignificant while at the same time laughing at the famous. But how does this relate to the on-line world? How do we compare to other on-liners? Is there a reward for all the time and enthusiasm we sacrifice to screen and keyboard? Is our on-line existence important enough to make us celebrities? Or will we be famous to only fifteen people, as Warhol’s quote has been adapted once more in the context of emerging on-line phenomena?

Find out with “In 15 minutes everybody will be famous.” Attracted by curiosity and self(over)estimation, on-liners are drawn to our system. They are asked to present as many artefacts (mobile phone, credit cards, usernames, passwords, etc.) as possible to the system, which then automatically discovers their on-line trails using the information drawn from the artefacts. A variant of Google’s PageRank algorithm (our people rank algorithm) calculates individual on-line celebrity values based on social networks and on-line behaviour. The result is a single value that sums up one’s entire on-line existence. There are obvious ways to improve an on-line existence. Come back: with the right network, everybody can be famous in 15 minutes.

Conceived: July 2006, submitted to Prix Ars in March 2007

Concept and implementation: Corebounce Art Collective

Project Details (Excerpt from the Submission)

Objectives

The foremost goal of this project is to sum up your online existence in one single numeric value and thus render your existence comparable to others. But how much information are you willing to hand out for this single number? Losing privacy for fame is a creeping process in real life; here it is rendered explicit. What are you ready to reveal? Your e-mail address? Your MySpace credentials? The contacts on your mobile phone? Your credit card? The more you offer, the more likely the system is to get the big picture across communities and metaverses. And what about elevating your celebrity value by faking an online presence? What about stealing credentials? Without being asked directly, users are forced to think about these questions on their way to fame and to reflect on their motivations. In the process, one will not only learn how much or how little one matters in the electronic universe but may also rediscover long-forgotten traces left throughout electronic systems.

Language and context

The interaction is realized with simple, universally understood iconic dialogs and is thus language-independent. There are no inherent geographical or cultural restrictions whatsoever. However, due to the selection of supported online communities, the implementation is currently biased towards the western hemisphere.

Project History

This project draws on various scientific, technical, and artistic ancestors. It borrows the human-cognition-inspired approach to information discovery from the thesis “Automatic Software Configuration” (https://diuf.unifr.ch/pai/publications/2002/paper/Schubiger-PhD02.pdf). Ubiquitous access to mobile phone data is realized with a variant of SICAP’s POS module (https://www.sicap.com/). Close contacts with the masterminds of cocomment (https://www.cocomment.com/) helped us understand the technical and social challenges in today’s on-line communities. Corebounce’s long track record of public appearances and expertise in interactive installations heavily influenced the interaction design. In particular, the experience gained through RipMyDisk motivated the implementation of this project.

People

The driving people behind this project are the founding members of the Corebounce Association, who were also deeply involved in the forerunners: Pascal Müller, Stefan Arisona, Simon Schubiger-Banz, and Matthias Specht. They developed the original concept and carried out the software development. The project is intended to be instantiated either as an installation or as an online platform. The forerunner RipMyDisk was open to the public during Interactive Futures 2006. The software is entirely based on Soundium/Decklight, which is partially open source.

Lessons learned

From a technical perspective, RipMyDisk demonstrated the feasibility, but also the limits, of accessing physical artefacts linked to the online world. While continuously improving artefact support, we also integrated additional input devices such as card readers and a keyboard. Whereas RipMyDisk was a specialized system tailored to a specific event, the new system is highly modular, accommodating a wide range of community software and web interfaces for maximum reach into the online world. From a social viewpoint, two remarkable observations were made. First, technical limitations were circumvented by ad-hoc social networks that emerged around “gateway” artefacts. We call a device a “gateway artefact” if it can be used to feed information into the system without itself representing a real-life identity. Second, privacy is, at least in an installation setting, of little or no concern: the urge to participate, here reinforced by the urge to become famous, temporarily blinds users with respect to privacy and security.

Technical Information

The Soundium research platform served as the technological basis for the realization of the project. As indicated earlier, Soundium has roots in various computer science research domains, ranging from software engineering and human-computer interaction to real-time multimedia processing and computer graphics. Development of the platform was initiated by Corebounce in 1999 and has since been supported by various Swiss universities and companies. One of the principal design goals of Soundium was to provide a highly modular software architecture for the rapid realisation of scientific experiments as well as artistic ideas. Besides this project and its basis, RipMyDisk, Soundium has been applied intensively for live visuals and VJ performances, as well as for interactive media art installations (for example the Digital Marionette, currently exhibited at the Ars Electronica Center). Soundium runs on Linux and is freely available.

Selected References (Related to the Soundium Platform)

P. Müller, S. Arisona, K. A. Huff and B. Lintermann. 2007. Digital Art Techniques. ACM SIGGRAPH 2007, San Diego, CA, USA. To appear in: Course Notes of the ACM SIGGRAPH 2007, ACM Press.

P. Müller, S. Arisona, S. Schubiger and M. Specht. 2007. Interactive Editing of Live Visuals. To appear in: J. Braz, A. Ranchordas, H. Araújo and J. Jorge (eds). Computer Graphics and Computer Vision: Theory and Applications I, Springer Verlag.

P. Müller, S. Arisona, S. Schubiger, and M. Specht (Corebounce Art Collective). 2006. Digital Marionette. In: Simplicity - The Art of Complexity. Ars Electronica 2006: 348 - 349.

S. Arisona, S. Schubiger, and M. Specht. 2006. A Real-Time Multimedia Composition Layer. Proceedings of AMCMM, Workshop on Audio and Music Computing for Multimedia. ACM Multimedia 2006, Santa Barbara, October 23-27.

S. Schubiger and S. Arisona. 2003. Soundium2: An Interactive Multimedia Playground. In: Proceedings of the 2003 International Computer Music Conference, ICMA, San Francisco.

Solutions

The system draws its vigour from three key elements:

- Ubiquitous access to personal artefacts. Through various interfaces to the physical world (card reader, infrared port, Bluetooth, keyboard), artefacts can be transferred to the system in a ubiquitous way.

- Modular integration of existing and emerging community services. A unique automatic site crawling technique enables the integration of arbitrary on-line communities, portals, blogs, newsgroups, etc. in a modular way. The crawler isolates individuals, relates them, and extracts their social networks.

- Social ranking is largely based on Google’s PageRank algorithm, adapted in our system to rank people. PageRank measures the importance of a page by the links pointing to that page and the rank of the pages these links originate from. “People rank” is measured by the number of people who refer to an individual, combined with the rank of those referrers. Additionally, on-line activity over time is integrated into the people rank to account for the volatile character of fame.
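The submission does not specify how on-line activity is folded into the people rank. This minimal Python sketch assumes one possibility: standard PageRank power iteration over a “refers-to” graph between people, with the random-jump (teleport) distribution weighted by recency-decayed activity, i.e. a personalised PageRank. The function name, the half-life decay, and the graph format are assumptions for illustration.

```python
import math


def people_rank(refs, activity_ages, damping=0.85, half_life_days=30.0,
                iters=100, tol=1e-12):
    """Hypothetical people rank: PageRank with an activity-weighted teleport vector.

    refs: person -> people they refer to (friend links, comments, mentions).
    activity_ages: person -> ages in days of their on-line actions.
    Returns person -> rank; the ranks sum to 1.
    """
    people = sorted(set(refs) | set(activity_ages)
                    | {q for targets in refs.values() for q in targets})
    if not people:
        return {}
    n = len(people)

    # Recency-weighted activity: each action's weight halves every half_life_days.
    decay = math.log(2) / half_life_days
    act = {p: sum(math.exp(-decay * age) for age in activity_ages.get(p, []))
           for p in people}
    total = sum(act.values())
    teleport = {p: act[p] / total if total > 0 else 1.0 / n for p in people}

    rank = {p: 1.0 / n for p in people}
    for _ in range(iters):
        new = {p: (1 - damping) * teleport[p] for p in people}
        dangling = 0.0
        for p in people:
            targets = sorted(set(refs.get(p, [])) - {p})  # ignore self-references
            if targets:
                share = damping * rank[p] / len(targets)  # split rank among referred people
                for q in targets:
                    new[q] += share
            else:
                dangling += damping * rank[p]  # refers to nobody: redistribute via teleport
        for p in people:
            new[p] += dangling * teleport[p]
        delta = sum(abs(new[p] - rank[p]) for p in people)
        rank = new
        if delta < tol:
            break
    return rank
```

People with many referrers, or with highly ranked referrers, accumulate rank as in PageRank. Recent activity only reshapes the jump distribution, so older actions count less, which is one way to model the volatile character of fame.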

Implementation

The research instance of the project is currently running at ETH Zurich’s Computer Systems Institute. This is where the software further evolves. Several partners are using parts of the code for rapid-prototyping of their own research projects. Corebounce will be showing artistic applications of the software at this year’s TweakFest (Zurich, https://www.tweakfest.ch) and Digital Art Weeks (Zurich, https://www.digitalartweeks.ethz.ch).

Users

The project addresses everyone with an Internet identity, and/or those that are actively involved in social or technological aspects of digital communities.

License

Some parts of the software are already open-source, or will be released as open-source within a short timeframe. Some parts are currently closed-source, but available for free. Current licensees are mainly research institutions involved in computer systems, network technology, as well as multimedia and digital art.

Statement of Reasons

The main contribution of our project is the novel way to look at communities and to explore the interconnections between our physical identity and our various net identities. The project reveals many important social and technical issues. In particular, it highlights the widening gap between the need for privacy and the desire for publicity. With its scientific orientation, it serves as a solid foundation for future projects in the domain. It is our goal to contribute to social, sociological, and technical research related to digital communities, as well as to artistically oriented projects.

Pianist's Hands - Synthesis of Musical Gestures

PhD Thesis - Stefan Arisona, 2004

The process of music performance had been the same for many centuries: a work was perceived by the listening audience at the same time as it was performed by a single performer or a group of performers. The performance was not only characterised by its audible result, but also by the environment and the physical presence of the performing artists and the audience. Further, a performance was always unique in the sense that it could not be repeated in exactly the same way. The evolution of music recording technology brought a major change to this situation and to music listening practice in general: a recorded performance suddenly became available to a dramatically increased number of listeners, and one could listen to the same performance as many times as desired. However, in a recorded music performance, the environmental characteristics and the presence of the performing artists and the audience are lost. This particularly includes the loss of musical gestures, which are an integral part of a music performance. The availability of electronic music instruments further reinforces this loss of musical gestures, because the previously strict connection between performer, instrument, and listener is blurred.

This thesis deals with the problem of constructing musical gestures from a given music performance. It introduces a mathematical model in which musical gestures are represented as high-dimensional parametric gesture curves. Through a number of mathematical operations, the model provides mechanisms for manipulating those curves and for constructing complex gesture curves out of simple ones. The model is embedded into the existing performance model of mathematical music theory, where a musical performance is defined as a transformation from a symbolic score space to a physical performance space.
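To make the idea of building complex gesture curves from simple ones more concrete, here is a minimal Python sketch, assuming a curve is simply a function from a time interval into R^n (for a hand, n could be three coordinates per fingertip). The class name, the operations (time shift, spatial scaling, concatenation), and the straight-line primitive are illustrative assumptions, not the thesis's formal model or the PerformanceRubette's interface.

```python
from dataclasses import dataclass
from typing import Callable

Point = tuple[float, ...]  # one point in R^n, e.g. stacked fingertip coordinates


@dataclass(frozen=True)
class GestureCurve:
    """Hypothetical gesture curve: a map from the time interval [t0, t1] into R^n."""
    t0: float
    t1: float
    f: Callable[[float], Point]

    def __call__(self, t: float) -> Point:
        if not self.t0 <= t <= self.t1:
            raise ValueError("t lies outside the curve's time interval")
        return self.f(t)

    def shift(self, dt: float) -> "GestureCurve":
        """Move the curve later in time by dt (earlier if dt < 0)."""
        return GestureCurve(self.t0 + dt, self.t1 + dt, lambda t: self.f(t - dt))

    def scale_space(self, s: float) -> "GestureCurve":
        """Scale every spatial coordinate by s; timing is unchanged."""
        return GestureCurve(self.t0, self.t1, lambda t: tuple(s * x for x in self.f(t)))


def line(p: Point, q: Point) -> GestureCurve:
    """Primitive gesture: straight movement from p to q over unit time."""
    return GestureCurve(0.0, 1.0, lambda t: tuple(a + t * (b - a) for a, b in zip(p, q)))


def concat(first: GestureCurve, second: GestureCurve) -> GestureCurve:
    """Play `second` right after `first`; spatial continuity is NOT enforced here."""
    moved = second.shift(first.t1 - second.t0)
    return GestureCurve(first.t0, moved.t1,
                        lambda t: first.f(t) if t <= first.t1 else moved.f(t))
```

For example, `concat(line((0.0, 0.0), (1.0, 0.0)), line((1.0, 0.0), (1.0, 2.0)))` is a two-stroke gesture on [0, 2]. A fuller model would also have to guarantee smooth joins and respect physical constraints, which this sketch leaves out.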

While gestures in the symbolic domain represent abstract movements, gesture curves in the physical domain reflect “real” movements of a virtual performer, which can be rendered to a computer screen. To ensure that these movements are correct, one has to take into account a number of constraints imposed by the performer’s body, the instrument’s geometry, and the laws of physics. To satisfy these constraints, a shaping mechanism for cubic splines based on Sturm’s theorem is presented.
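Sturm’s theorem counts the distinct real roots of a polynomial in an interval (a, b) as the drop in the number of sign changes of its Sturm sequence between a and b, provided neither endpoint is a root. The sketch below assumes one simple way to apply this to a cubic spline segment: checking, with exact rational arithmetic, whether the segment (for example a fingertip height) ever touches or dips below a limit on its parameter interval. The function names and this particular constraint are hypothetical; the thesis’s actual shaping mechanism is not reproduced here.

```python
from fractions import Fraction

# Polynomials are coefficient lists, highest degree first: [c3, c2, c1, c0].


def trim(p):
    """Drop leading zero coefficients, keeping at least one entry."""
    i = 0
    while i < len(p) - 1 and p[i] == 0:
        i += 1
    return p[i:]


def derivative(p):
    n = len(p) - 1
    if n == 0:
        return [Fraction(0)]
    return trim([c * (n - i) for i, c in enumerate(p[:-1])])


def evaluate(p, x):
    """Horner evaluation of p at x."""
    acc = Fraction(0)
    for c in p:
        acc = acc * x + c
    return acc


def poly_rem(a, b):
    """Remainder of a divided by b (b has a nonzero leading coefficient)."""
    a = list(a)
    while len(a) >= len(b) and any(a):
        q = a[0] / b[0]
        padded = b + [Fraction(0)] * (len(a) - len(b))
        a = [x - q * y for x, y in zip(a, padded)]
        a = trim(a[1:]) if len(a) > 1 else [Fraction(0)]
    return a


def sturm_sequence(p):
    """p0 = p, p1 = p', p_{k+1} = -rem(p_{k-1}, p_k) until the remainder vanishes."""
    seq = [trim(p)]
    d = derivative(seq[0])
    while any(d):
        seq.append(d)
        d = [-c for c in poly_rem(seq[-2], seq[-1])]
    return seq


def sign_changes(seq, x):
    values = [v for v in (evaluate(p, x) for p in seq) if v != 0]
    return sum(1 for u, v in zip(values, values[1:]) if (u > 0) != (v > 0))


def count_roots(coeffs, a, b):
    """Distinct real roots in (a, b); requires a < b and neither endpoint a root."""
    p = [Fraction(c) for c in coeffs]
    seq = sturm_sequence(p)
    return sign_changes(seq, Fraction(a)) - sign_changes(seq, Fraction(b))


def violates_floor(coeffs, floor, a=0, b=1):
    """True if the cubic segment touches or drops below `floor` on [a, b]."""
    p = [Fraction(c) for c in coeffs]
    p[-1] -= Fraction(floor)          # roots of p: where the segment meets the floor
    if evaluate(p, Fraction(a)) <= 0 or evaluate(p, Fraction(b)) <= 0:
        return True                   # an endpoint already violates the limit
    return count_roots(p, a, b) > 0   # both ends above: any root means a dip
```

Using `Fraction` avoids tolerance problems in the remainder sequence: float coefficients are converted exactly, so the sign counts are exact for the given inputs.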

A software module called the PerformanceRubette provides a framework for the construction and manipulation of gesture curves for piano performance. It takes as input a music performance and constraints based on a virtual hand model. The resulting output consists of sampled physical gesture curves describing the movements of the virtual performer’s fingertips. The software module has been used to create animated sequences of a virtual hand performing on a keyboard, to animate abstract objects in audio-visual performance applications, and for gesture-based sound synthesis.

Keywords: Gestural Performance, Performance Interfaces, Performance Theory, Computer Animation.

PhD Thesis: Multimedia Laboratory, University of Zurich, 2001 - 2004

PhD Candidate: Stefan Arisona

PhD Advisors: Prof. Dr. Peter Stucki and Prof. Dr. Guerino Mazzola